TRAILS Summer Fellows Develop Technology to Enhance Reliability in AI Responses for Blind Users

Blind and low-vision (BLV) individuals face daily challenges accessing visual information. AI tools can assist with tasks like reading medication labels, identifying objects in photos, or interpreting images, but they are not always reliable: some produce answers that sound plausible but are incorrect.

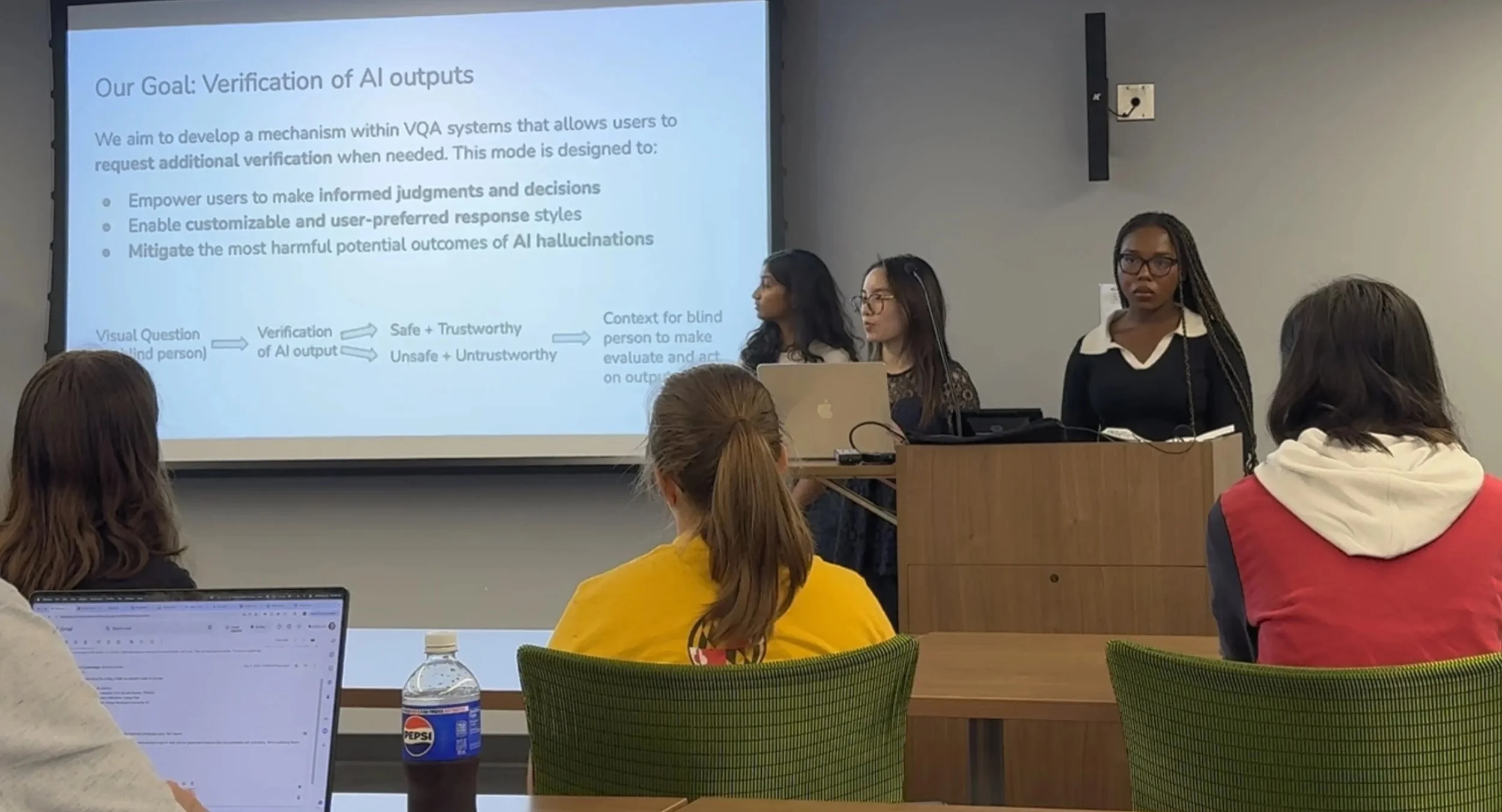

To address this, undergraduate summer research fellows in the Institute for Trustworthy AI in Law and Society (TRAILS) built a system that adds a verification layer beneath the surface to provide BLV users with more reliable AI-generated answers in real time.

The work was part of the 2025 Summer Undergraduate Research Fellowships (SURF) program in TRAILS, which engages students in interdisciplinary research on trustworthy AI.

The five students working on the project—Tyler Austin, Adaeze Azubike, Chaitra Bhumula, Robert Hopkins and Tina Zeng—spent 10 weeks gathering data, brainstorming ideas and developing a prototype mobile app for BLV users.

From the outset, the researchers say, the design of the technology was shaped through direct participation of the BLV community, ensuring that accessibility and trust were embedded in the system rather than added on later.

The app the team developed, called VerifAI, allows users to upload images and ask follow-up questions in conversations with an AI-infused Visual Question Answering (VQA) system.

Behind the scenes, verification layers help the AI provide more reliable responses, and the system can prompt users for follow-up actions, such as taking a clearer photo, when an answer might otherwise be inaccurate.

“The TRAILS summer fellows balanced technical and accessibility perspectives in a way that stood out,” says Farnaz Zamiri Zeraati, a fifth-year computer science doctoral student at UMD who oversaw the team. “They moved beyond a purely technical project to one that considered user trust and real-world usability.”

Other mentoring for the TRAILS summer fellows came from Hernisa Kacorri, an associate professor at UMD, and postdoctoral researcher Vaishnav Kameswaran, both members of TRAILS.

How does VerifAI work? The system is designed to check AI outputs by asking the same question about an image multiple times. It then examines how similar the answers are to each other to spot mistakes or unreliable responses. If the answers are sufficiently consistent, VerifAI presents them to the user. If not, it suggests taking another photo or asking a human, says Austin, a SURF fellow and senior at Morgan State University majoring in computer science.
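The consistency check Austin describes can be illustrated with a short Python sketch. This is not the team's code: the `ask` callable, the sample count, the 0.6 threshold, and the choice to return the first answer are all assumptions made for illustration, and simple word overlap (Jaccard similarity) stands in for whatever similarity measure VerifAI actually uses.

```python
from itertools import combinations
from typing import Callable, List, Optional


def jaccard(a: str, b: str) -> float:
    """Word-overlap similarity in [0, 1]; a stand-in for a real similarity measure."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a and not words_b:
        return 1.0  # two empty answers are treated as identical
    return len(words_a & words_b) / len(words_a | words_b)


def mean_pairwise_similarity(answers: List[str]) -> float:
    """Average similarity over every pair of sampled answers."""
    pairs = list(combinations(answers, 2))
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


def verified_answer(
    ask: Callable[[], str],   # hypothetical: sends the same image and question to the model
    n_samples: int = 5,       # assumed number of repeated queries
    threshold: float = 0.6,   # assumed cutoff for "sufficiently consistent"
) -> Optional[str]:
    """Return an answer if repeated samples agree, otherwise None."""
    if n_samples < 2:
        raise ValueError("need at least two samples to compare")
    answers = [ask() for _ in range(n_samples)]
    if mean_pairwise_similarity(answers) >= threshold:
        return answers[0]  # assumption: show the first of the agreeing answers
    return None  # caller would suggest retaking the photo or asking a human
```

In a sketch like this, a `None` result is the cue for the app to prompt a follow-up action instead of presenting an answer that may be wrong.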

Austin was tasked with building a mobile interface for VerifAI, using the Swift programming language and the ChatGPT-4o large language model to power a VQA system capable of generating responses from image-question pairs. He also experimented with voice-enabled navigation, a feature that the team hopes to refine in the future.

This hands-on work over the summer with VerifAI gave Austin a deeper perspective on the capabilities and limitations of AI tools.

“ChatGPT is a powerful tool that can do almost anything you ask,” he says. “It’s excellent for generating project outlines, but it’s definitely not a band-aid—you still need to question its outputs and stay open to feedback and criticism.”

Azubike, a UMD junior majoring in economics, focused on both technical and ethical aspects of the project.

“I helped analyze how BLV users interact with AI tools, rated classifier outputs for reliability, and participated in interviews with a BLV participant,” she says. “We realized ethics couldn’t be an afterthought, so we embedded it directly into classifier training and prompt evaluations.”

As part of this work, the team also developed and applied its own trustworthiness metric to better assess the reliability of AI-generated responses for BLV users. A future extension of this work could explore how to handle sensitive information, such as personal images or location data, and how to strengthen privacy protections.

Along the way, the TRAILS summer fellows gained experience in natural language processing methods, embeddings, evaluation metrics for generative AI, and accessibility datasets, while also practicing the full research cycle: reviewing literature, shaping questions, implementing solutions, iterating on feedback, and presenting results. The team plans to share its findings this fall through conference papers and presentations, extending the impact of the work beyond the lab.

“We are very proud of what our fellows have accomplished this summer, as well as the integral contributions students make to TRAILS research throughout the year,” says Darren Cambridge, the managing director of TRAILS. “Our student researchers not only capitalize on transformative opportunities for experiential learning, but also substantively advance our understanding of trust and AI, guided by the concerns and preferences of the actual people whose lives the technology most affects.”

—Story by Melissa Brachfeld, UMIACS communications group