Research

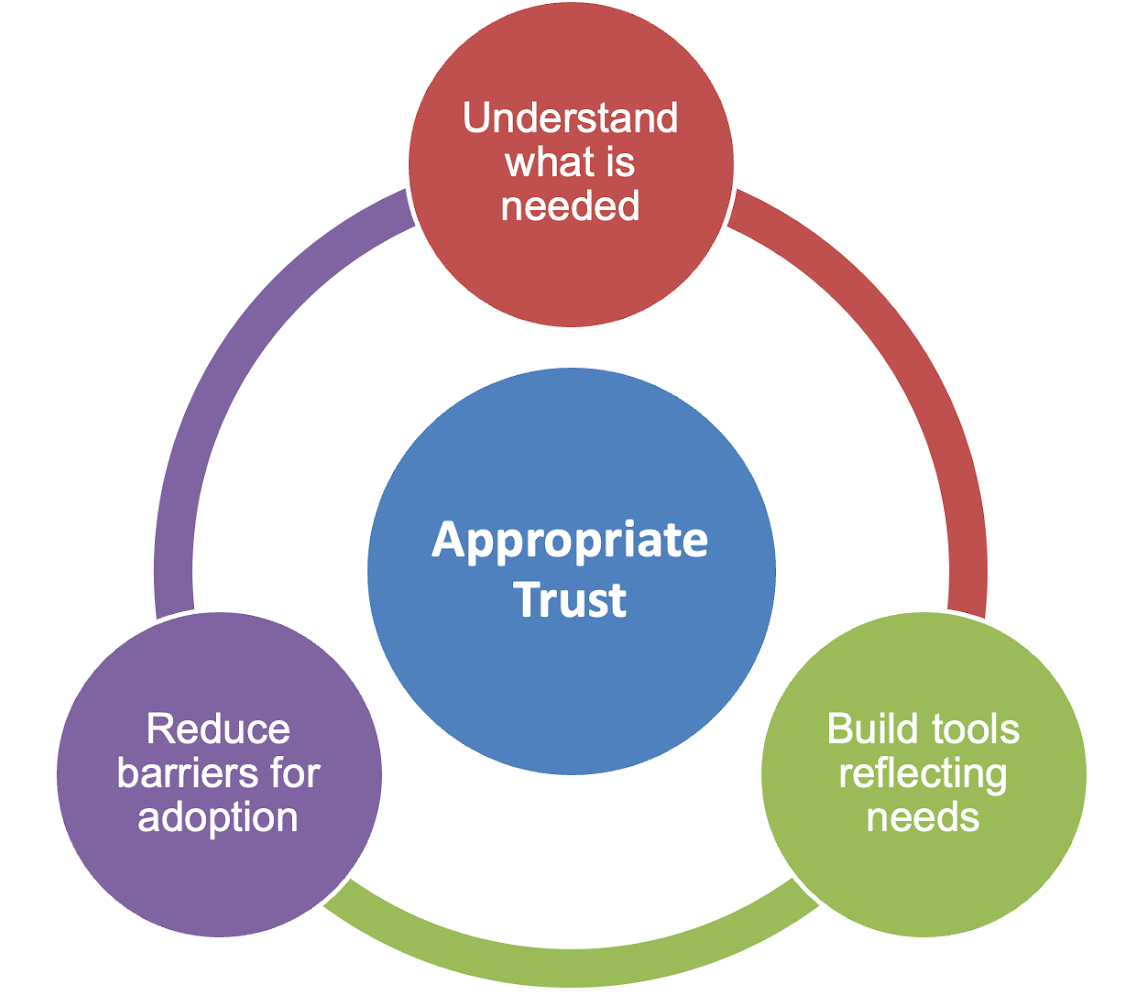

To achieve trustworthy AI, we must understand what is needed, build tools based on that understanding, and reduce barriers to adoption of those tools, all across a variety of specific contexts. To address these challenges, we pursue four strategic goals:

What is needed: TRAILS develops and studies participatory practices for trustworthy AI and for the governance of AI.

Reducing barriers: TRAILS develops and studies ethical and risk management practices, frameworks, and policies that lead to trustworthy AI.

Building tools: TRAILS develops methods and measures for assessing and increasing trustworthiness in AI-infused systems.

In context: TRAILS develops technical tools to study AI risks and trustworthiness in dynamic sociotechnical environments with feedback loops.

Our researchers pursue these goals within and across four key research “trails,” with the aim of promoting the development of AI systems that can earn the public’s trust through broader participation in the AI ecosystem.


Participatory Design

We create new AI technology and advocate for deploying and using it in ways that align with the values and interests of a broader range of stakeholders, creating opportunities for all Americans to actively shape and benefit from AI.

Lead: Katie Shilton, a professor in UMD’s College of Information who specializes in ethics and sociotechnical systems, particularly as they relate to big data.


Methods and Metrics

We develop novel methods, metrics and advanced machine learning algorithms that reflect the values and interests of relevant stakeholders, allowing them to understand the behavior and best uses of AI-infused systems.

Lead: Tom Goldstein, a UMD professor of computer science who leverages mathematical foundations and efficient hardware for high-performance systems.


Sense-Making

We evaluate how people make sense of AI systems, and the degree to which those systems’ levels of reliability, fairness, transparency and accountability can lead to appropriate levels of trust.

Lead: David Broniatowski, a GW professor of engineering whose research focuses on decision-making under risk, group decision-making, system architecture, and behavioral epidemiology.


Participatory Governance

We explore how policymakers at all levels in the U.S. and abroad can foster trust in AI systems, as well as how policymakers can incentivize broader participation and accountability in the design and deployment of these systems.

Lead: Susan Ariel Aaronson, a research professor of international affairs at GW who is an expert in data-driven change and international data governance.

Morgan State faculty and students—led by Virginia Byrne, an assistant professor of higher education and student affairs—interact with all four research trails, focusing on community-driven projects related to the interplay between AI and education.

Federal officials at NIST will collaborate with TRAILS in the development of meaningful measures, benchmarks, test beds and certification methods, particularly as they apply to topics essential to trust and trustworthiness, such as safety, fairness, privacy, transparency, explainability, accountability, accuracy and reliability.

Research by TRAILS faculty, postdocs and students builds upon the combined strengths of the four primary institutions:

University of Maryland’s expertise in machine learning, artificial intelligence and the understanding of sociotechnical systems

George Washington University’s expertise in law, policy, governance, human-computer interaction and sociotechnical systems engineering

Morgan State University’s expertise in developing forward-looking education modules and working with communities

Cornell University’s expertise in brain and behavioral sciences, psychometrics, risk and uncertainty, artificial intelligence, and law