TRAILS Announces Third Round of Seed Funding

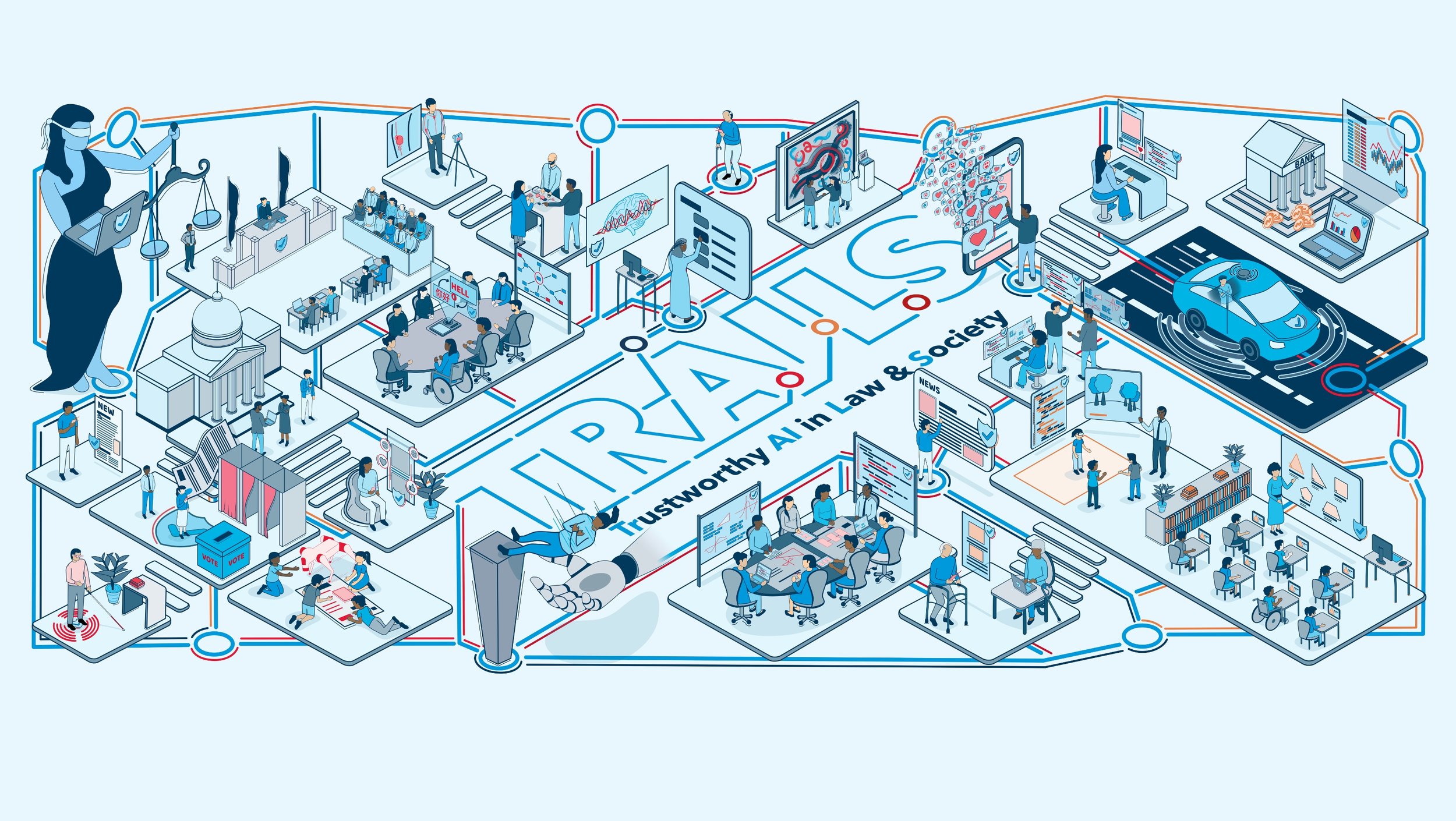

Launched in May 2023 with a $20 million award from the National Science Foundation and the National Institute of Standards and Technology, TRAILS is focused on developing, building and modeling participatory research that—over time—will increase trust in AI. Illustration courtesy of TRAILS

The Institute for Trustworthy AI in Law & Society (TRAILS) has announced a third round of seed funding, offering substantial support to a series of projects intended to transform the practice of AI from one that is driven solely by technological development to one that encourages tech innovation and competitiveness through cutting-edge science focused on human rights and human flourishing.

The seven seed grants announced on July 22, 2025, each between $50,000 and $150,000 and totaling just over $750,000, were awarded to faculty and students from the University of Maryland, George Washington University, Morgan State University and Cornell University, representing all four of TRAILS’ academic institutions.

The interdisciplinary projects will address topics that include ensuring the trustworthiness of public safety information that large language models (LLMs) extract during disasters, identifying instructional needs for youth and families who wish to engage with AI, and helping a broader range of stakeholders participate more fully in the governance of AI.

The projects were chosen based on their potential to advance TRAILS’ four core scientific research thrusts: participatory AI design, methods, sense-making and governance. They also enact the institute’s commitment to advancing scientific knowledge in concert with educating and empowering AI users, said Hal Daumé III, a professor of computer science at the University of Maryland and the director of TRAILS.

“As we continue to expand our impact and outreach, we’re aware of the need to align our technological expertise—which is quite robust—with new methodologies we’re developing that can help people and organizations realize the full potential of AI,” Daumé said. “If people don’t understand and see what they care about reflected in AI technology, they’re not going to trust it. And if they don’t trust it, they won’t want to use it.”

The seed funding is just a first step toward moving many of these new initiatives forward, said David Broniatowski, a professor of engineering management and systems engineering at George Washington University. Broniatowski, who is the deputy director of TRAILS, said that ultimately, the TRAILS-sponsored research teams are expected to seek external funding or form new partnerships to further grow their work.

He cited the example of a project funded during the first round of TRAILS seed funding in the fall of 2024, in which researchers sought to expand teaching best practices by adapting AI tools originally developed to support instructional excellence in core subjects. That work led to a series of additional grants, culminating in a $4.5 million grant from the Gates Foundation/Walton Family Foundation to improve AI as a tool for strengthening math instruction and boosting learning.

“We’re investing in the future of AI with this latest cohort of seed projects,” Broniatowski said. “These ambitious endeavors are strategically aligned, impact-driven initiatives that will advance the science underlying AI adoption, shaping future conversations on AI governance and trust.”

The seven projects chosen to receive TRAILS seed funding are:

• Adam Aviv and Jan Tolsdorf from GW and Michelle Mazurek from UMD are developing an auditing framework that lets people and organizations test context- and user-specific properties in LLMs like ChatGPT. The team’s open-source technology is designed to broaden access to evaluation tools developed through prior TRAILS-supported research to assess trustworthiness in generative AI systems. The goal is to open new pathways for broader academic research and to encourage public participation in areas like cybersecurity “red teaming,” where benevolent hackers use LLMs to conduct non-destructive cyberattacks that can expose vulnerabilities.

• Sheena Erete, Hawra Rabaan and Tamara Clegg from UMD and Afiya Fredericks from Morgan State are examining how youth and families think about AI. They’re identifying the technical and instructional needs for building a growth-oriented, AI-infused learning environment worthy of trust. The TRAILS team will implement a participatory design study that engages youth, parents and educators to understand how communities define AI literacy and perceive AI technologies, and what types of infrastructure are needed to support sustained AI education.

• Jordan Boyd-Graber and Mohit Iyyer from UMD are examining question-answering (QA) datasets and metrics, comparing human and computer capabilities in determining the trustworthiness of information gathered from searching large volumes of text. While multimodal QA datasets already exist, many are artificial or gameable. The TRAILS team will address this gap by collecting challenging multimodal visual QA examples online, presenting them to skilled human users, and using the results to diagnose the strengths and weaknesses of current state-of-the-art multimodal AI models, with the aim of making them more trustworthy.

• Lovely-Frances Domingo, Maria Isabel Magaña Arango, Sander Schulhoff and Daniel Greene, all from UMD, are exploring how a new form of public red teaming, in which multiple participants identify and report vulnerabilities, biases and other safety issues in AI models, can enhance the trust and safety of generative AI systems like ChatGPT. The TRAILS team plans to recruit participants and engage them in competition-style events that will offer data on how social and situational factors influence trust in generative AI, and how active engagement with the technology affects the participants’ trust.

• Zoe Szajnfarber from GW is exploring novel pathways to increase participation and responsibility across stakeholders involved in the governance of AI ecosystems. Many current governance frameworks focus on the AI model or data as the unit of analysis. This stands in stark contrast to more mature domains, which enact a layered socio-technical system of governance that targets different risks at different levels and for different actors. By mapping the safety ecosystems of a sample of these domains, Szajnfarber will use systems engineering to help evolve AI ecosystem governance so that the technology can seamlessly perform important tasks like protecting human rights and removing barriers to innovation.

• Valerie Reyna and Sarah Edelson from Cornell and Robert Brauneis from GW are examining the “science of substantial similarity” in AI-related copyright litigation. Because AI companies often justify repurposing copyrighted materials as fair use, copyright cases hinge on cognitive perceptions of substantial similarity and transformativeness. The TRAILS team will conduct three sets of experiments, varying whether copyrighted works share gist-based (“total concept and feel”) or verbatim-based (surface features) similarity in copyright case descriptions, and also varying the explanations of computational transformation presented to potential jurors. They will then assess the jurors’ perceptions of substantial similarity, transformativeness and trust, as well as the individual differences that shape those perceptions.

• Erica Gralla and Rebecca Hwa from GW are examining the use of LLMs in the high-stakes setting of disaster recovery to understand how to measure and improve the trustworthiness of the technology. Using data from the 2025 Los Angeles wildfires, the TRAILS team will examine how people drew on multiple sources, including news media, social networks and government updates, along with the aid of LLMs, in deciding to evacuate the danger zone.